EBM Notes

This note reminds readers of some important properties and conclusions about energy-based models (EBMs).

To read through this note, you need some background knowledge of EBMs, RBMs and MRFs. It is not written for complete beginners. It is for readers who know the basic concepts of these models and want to learn more of the mathematics underlying them, or who have had trouble understanding that mathematics.

1. Joint Distribution

The random variables in an EBM can usually be divided into two sets. Some variables have values that can be observed; we denote them by $\mathbf{v}$, i.e., visible. The others cannot be observed; we denote them by $\mathbf{h}$, i.e., hidden.

Here, $\mathbf{v}$ and $\mathbf{h}$ are vectors whose elements correspond to the activities of individual units.

We denote the energy of a specific configuration $(\mathbf{v}, \mathbf{h})$ by $E(\mathbf{v}, \mathbf{h})$. The function $E$ can take on any real value.

For a specific configuration $(\mathbf{v}, \mathbf{h})$, the corresponding probability is:

$$P(\mathbf{v}, \mathbf{h}) = \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{Z}$$

where $Z$ is the normalization constant, $Z = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}$.

Using $\exp$ ensures that the unnormalized distribution is always positive, and frees the energy function to take on any real value.
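As a small numerical illustration of the definition above, the sketch below builds a joint distribution from an arbitrary energy table. The toy sizes (4 visible and 4 hidden configurations) and the names `energy_table`, `unnormalized` and `joint` are assumptions made only for this example.

```python
import numpy as np

# Hypothetical toy model: 4 visible configurations and 4 hidden configurations.
# energy_table[i, k] plays the role of E(v_i, h_k); entries are arbitrary reals,
# some of them negative.
rng = np.random.default_rng(0)
energy_table = rng.normal(size=(4, 4))

unnormalized = np.exp(-energy_table)   # strictly positive for any real energy
Z = unnormalized.sum()                 # normalization constant: sum over all (v, h)
joint = unnormalized / Z               # P(v, h)

print("sum of P(v, h):", joint.sum())
print("lowest-energy configuration is the most probable:",
      np.argmin(energy_table) == np.argmax(joint))
```

Because $\exp$ is monotonic, the configuration with the lowest energy always receives the highest probability.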

2. Marginal Distribution

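Sometimes we want to know $P(\mathbf{v})$:

$$P(\mathbf{v}) = \sum_{\mathbf{h}} P(\mathbf{v}, \mathbf{h}) = \frac{\sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}}{Z}$$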
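Marginalizing out the hidden variables amounts to summing the joint table over its hidden axis. The following is a minimal sketch under the assumption of a tiny model whose configurations can be enumerated; the table shape and variable names are hypothetical.

```python
import numpy as np

# Hypothetical energy table: rows index visible configurations,
# columns index hidden configurations.
rng = np.random.default_rng(1)
energy_vh = rng.normal(size=(4, 3))

unnorm_vh = np.exp(-energy_vh)
Z_total = unnorm_vh.sum()
joint_vh = unnorm_vh / Z_total

# P(v) = sum_h P(v, h): sum over the hidden axis.
marginal_v = joint_vh.sum(axis=1)

# Equivalent form: sum_h exp(-E(v, h)) / Z.
marginal_v_alt = unnorm_vh.sum(axis=1) / Z_total

print(marginal_v)
```

For realistic models this enumeration is intractable, since the number of configurations grows exponentially with the number of units.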

3. Maximum Log-Likelihood

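To learn an EBM, we want to use an optimization algorithm, such as gradient descent, to maximize the log-likelihood:

$$\max_{\theta} \; \log P(\mathbf{v})$$

or more formally,

$$\theta^{*} = \arg\min_{\theta} \left( -\log P(\mathbf{v}; \theta) \right)$$

for all $\mathbf{v}$ from the training set.

Thus we need to compute $-\frac{\partial \log P(\mathbf{v})}{\partial \theta}$ in order to perform updates:

$$-\frac{\partial \log P(\mathbf{v})}{\partial \theta} = -\frac{\partial}{\partial \theta} \log \sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} + \frac{\partial}{\partial \theta} \log Z$$

Here we expand the left side of the equation using the marginal distribution relationship between $P(\mathbf{v})$ and $P(\mathbf{v}, \mathbf{h})$, and note that $\theta$ is contained in the energy function.

On the right side of the equation, the first term is conventionally called the positive phase and the second term the negative phase. I describe these two terms in more detail below.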

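3.1 Positive Phase

For the positive phase, we apply the chain rule:

$$-\frac{\partial}{\partial \theta} \log \sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} = -\frac{1}{\sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}} \frac{\partial}{\partial \theta} \sum_{\mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}$$

Then we move the first fraction into the summation and apply the chain rule again, so that the negative signs cancel:

$$= \sum_{\mathbf{h}} \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{\sum_{\mathbf{h}'} e^{-E(\mathbf{v}, \mathbf{h}')}} \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta}$$

Next we divide both the numerator and the denominator of the first fraction by $Z$ so as to apply the definitions of the joint and marginal distributions:

$$= \sum_{\mathbf{h}} \frac{P(\mathbf{v}, \mathbf{h})}{P(\mathbf{v})} \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta} = \sum_{\mathbf{h}} P(\mathbf{h} \mid \mathbf{v}) \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta}$$

We can rewrite it as an expectation:

$$= \mathbb{E}_{\mathbf{h} \sim P(\mathbf{h} \mid \mathbf{v})} \left[ \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta} \right]$$

This is the final form of the positive phase.

Warning: Note that we abuse the notation $\mathbf{v}$ here, since it no longer represents a random variable but a specific value of that random variable. Replacing it with another symbol would be more appropriate but less straightforward.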

3.2 Negative Phase

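For the negative phase, we also apply the chain rule:

$$\frac{\partial}{\partial \theta} \log Z = \frac{1}{Z} \frac{\partial Z}{\partial \theta}$$

Substitute $Z$ with its definition and apply the chain rule again:

$$= \frac{1}{Z} \sum_{\mathbf{v}, \mathbf{h}} \frac{\partial}{\partial \theta} e^{-E(\mathbf{v}, \mathbf{h})} = -\frac{1}{Z} \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta}$$

Move the first fraction into the summation so that we can use the definition of the joint distribution:

$$= -\sum_{\mathbf{v}, \mathbf{h}} P(\mathbf{v}, \mathbf{h}) \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta}$$

We can also rewrite it as an expectation:

$$= -\mathbb{E}_{\mathbf{v}, \mathbf{h} \sim P(\mathbf{v}, \mathbf{h})} \left[ \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta} \right]$$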

3.3 Learning Rule

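At last, we obtain:

$$-\frac{\partial \log P(\mathbf{v})}{\partial \theta} = \mathbb{E}_{\mathbf{h} \sim P(\mathbf{h} \mid \mathbf{v})} \left[ \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta} \right] - \mathbb{E}_{\mathbf{v}, \mathbf{h} \sim P(\mathbf{v}, \mathbf{h})} \left[ \frac{\partial E(\mathbf{v}, \mathbf{h})}{\partial \theta} \right]$$

Note that the names of these two terms do not come from their signs. They come from the fact that in the positive phase, $\mathbb{E}_{\mathbf{h} \sim P(\mathbf{h} \mid \mathbf{v})}$ (see note 1 in the Appendix), $\mathbf{h}$ is distributed according to $P(\mathbf{h} \mid \mathbf{v})$ and is free while $\mathbf{v}$ is given by the training set, whereas in the negative phase, $\mathbb{E}_{\mathbf{v}, \mathbf{h} \sim P(\mathbf{v}, \mathbf{h})}$, the pair $(\mathbf{v}, \mathbf{h})$ is distributed according to $P(\mathbf{v}, \mathbf{h})$ and both $\mathbf{v}$ and $\mathbf{h}$ are free.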
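The learning rule can be checked numerically on a model small enough to enumerate. The sketch below is not a training procedure; it assumes the bilinear energy $E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{c}^\top \mathbf{h} - \mathbf{v}^\top W \mathbf{h}$ commonly used for RBMs, takes $\theta = W$ (so $\partial E / \partial W = -\mathbf{v}\mathbf{h}^\top$), and compares "positive-phase expectation minus negative-phase expectation" with a finite-difference gradient of the negative log-likelihood. All function names are hypothetical.

```python
import itertools
import numpy as np

def all_binary(n):
    """All 2**n binary vectors of length n, one per row."""
    return np.array(list(itertools.product([0.0, 1.0], repeat=n)))

def energy(v, h, W, b, c):
    # Assumed bilinear energy: E(v, h) = -b^T v - c^T h - v^T W h
    return -(b @ v) - (c @ h) - v @ W @ h

def neg_log_likelihood(v, W, b, c):
    V, H = all_binary(len(b)), all_binary(len(c))
    log_unnorm = np.log(sum(np.exp(-energy(v, h, W, b, c)) for h in H))
    log_Z = np.log(sum(np.exp(-energy(vv, h, W, b, c)) for vv in V for h in H))
    return -(log_unnorm - log_Z)

def nll_grad_W(v, W, b, c):
    V, H = all_binary(len(b)), all_binary(len(c))
    # Positive phase: h ~ P(h | v), with v clamped to the training example.
    w_pos = np.array([np.exp(-energy(v, h, W, b, c)) for h in H])
    w_pos /= w_pos.sum()
    positive = sum(p * (-np.outer(v, h)) for p, h in zip(w_pos, H))
    # Negative phase: (v, h) ~ P(v, h), with both v and h free.
    pairs = [(vv, h) for vv in V for h in H]
    w_neg = np.array([np.exp(-energy(vv, h, W, b, c)) for vv, h in pairs])
    w_neg /= w_neg.sum()
    negative = sum(p * (-np.outer(vv, h)) for p, (vv, h) in zip(w_neg, pairs))
    return positive - negative

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 2))
b = rng.normal(size=3)
c = rng.normal(size=2)
v_data = np.array([1.0, 0.0, 1.0])   # one hypothetical training example

analytic = nll_grad_W(v_data, W, b, c)

# Central finite differences on the negative log-likelihood.
numeric = np.zeros_like(W)
eps = 1e-6
for i in range(W.shape[0]):
    for j in range(W.shape[1]):
        dW = np.zeros_like(W)
        dW[i, j] = eps
        numeric[i, j] = (neg_log_likelihood(v_data, W + dW, b, c)
                         - neg_log_likelihood(v_data, W - dW, b, c)) / (2 * eps)

print("max abs difference:", np.max(np.abs(analytic - numeric)))
```

In practice the negative phase cannot be enumerated like this and is usually approximated by sampling.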

4. Details in RBMs

The restricted Boltzmann machine (RBM) is a particular variant of EBMs.

RBMs share all the properties of EBMs, but there are a few details that we should look at.

4.1 Energy Function

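For RBMs, the energy function takes a specific form:

$$E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{c}^\top \mathbf{h} - \mathbf{v}^\top W \mathbf{h}$$

where $W$ is the weight matrix, $W_{ij}$ is the weight on the connection from visible unit $v_i$ to hidden unit $h_j$, and $\mathbf{b}$ and $\mathbf{c}$ are the bias vectors of the visible and hidden units.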
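A minimal sketch of this energy function for a single configuration follows. It assumes the common parameterization with a visible bias `b_vis` and a hidden bias `c_hid` in addition to the weight matrix; the function name `rbm_energy` and the numeric values are made up for illustration.

```python
import numpy as np

def rbm_energy(v, h, W, b, c):
    """E(v, h) = -b^T v - c^T h - v^T W h for one configuration (v, h)."""
    return -(b @ v) - (c @ h) - v @ W @ h

# Hypothetical parameters: 3 visible units, 2 hidden units.
W_rbm = np.array([[1.0, -2.0],
                  [0.5,  0.0],
                  [0.0,  3.0]])      # W_rbm[i, j]: weight between v_i and h_j
b_vis = np.array([0.1, 0.2, 0.3])    # visible biases
c_hid = np.array([-1.0, 1.0])        # hidden biases

v_cfg = np.array([1.0, 0.0, 1.0])
h_cfg = np.array([0.0, 1.0])

e_value = rbm_energy(v_cfg, h_cfg, W_rbm, b_vis, c_hid)
print(e_value)
```

Only the weights whose visible and hidden units are both active contribute to the interaction term, here `W_rbm[0, 1]` and `W_rbm[2, 1]`.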

4.2 Posterior Distribution

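According to the EBM learning rule, we need to know $P(\mathbf{h} \mid \mathbf{v})$. In RBMs, it has an easy-to-compute form:

$$P(\mathbf{h} \mid \mathbf{v}) = \frac{P(\mathbf{v}, \mathbf{h})}{P(\mathbf{v})} = \frac{\exp\left\{ \mathbf{b}^\top \mathbf{v} + \mathbf{c}^\top \mathbf{h} + \mathbf{v}^\top W \mathbf{h} \right\}}{\sum_{\mathbf{h}'} \exp\left\{ \mathbf{b}^\top \mathbf{v} + \mathbf{c}^\top \mathbf{h}' + \mathbf{v}^\top W \mathbf{h}' \right\}}$$

We can cancel all terms that do not contain $\mathbf{h}$ using the property $e^{x + y} = e^{x} e^{y}$:

$$= \frac{\exp\left\{ \mathbf{c}^\top \mathbf{h} + \mathbf{v}^\top W \mathbf{h} \right\}}{\sum_{\mathbf{h}'} \exp\left\{ \mathbf{c}^\top \mathbf{h}' + \mathbf{v}^\top W \mathbf{h}' \right\}}$$

Now we can treat the denominator as a normalization constant $Z'$:

$$= \frac{1}{Z'} \exp\left\{ \sum_{j=1}^{n_h} c_j h_j + \sum_{j=1}^{n_h} \mathbf{v}^\top W_{:,j} h_j \right\} = \frac{1}{Z'} \prod_{j=1}^{n_h} \exp\left\{ c_j h_j + \mathbf{v}^\top W_{:,j} h_j \right\}$$

where $n_h$ is the number of hidden units.

This form of $P(\mathbf{h} \mid \mathbf{v})$ shows its factorial nature: it is a product of unnormalized distributions over the individual elements $h_j$.

If all random variables in the RBM are binary, we can derive that:

$$P(h_j = 1 \mid \mathbf{v}) = \frac{\tilde{P}(h_j = 1 \mid \mathbf{v})}{\tilde{P}(h_j = 0 \mid \mathbf{v}) + \tilde{P}(h_j = 1 \mid \mathbf{v})} = \frac{\exp\left\{ c_j + \mathbf{v}^\top W_{:,j} \right\}}{\exp\{0\} + \exp\left\{ c_j + \mathbf{v}^\top W_{:,j} \right\}} = \sigma\left( c_j + \mathbf{v}^\top W_{:,j} \right)$$

where $\tilde{P}$ denotes the unnormalized distribution and $\sigma$ is the logistic sigmoid.

Because of the factorial nature of $P(\mathbf{h} \mid \mathbf{v})$, we can write it in this form:

$$P(\mathbf{h} \mid \mathbf{v}) = \prod_{j=1}^{n_h} \sigma\left( (2\mathbf{h} - 1) \odot (\mathbf{c} + W^\top \mathbf{v}) \right)_j$$

Similarly,

$$P(\mathbf{v} \mid \mathbf{h}) = \prod_{i=1}^{n_v} \sigma\left( (2\mathbf{v} - 1) \odot (\mathbf{b} + W \mathbf{h}) \right)_i$$

where $\odot$ denotes the element-wise product and $n_v$ is the number of visible units.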
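The closed-form posterior can be compared against brute-force enumeration on a small binary RBM. This sketch assumes the energy $E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{c}^\top \mathbf{h} - \mathbf{v}^\top W \mathbf{h}$; the sizes, the random parameters and all variable names are hypothetical.

```python
import itertools
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)
n_vis, n_hid = 3, 2
W_post = rng.normal(size=(n_vis, n_hid))
c_post = rng.normal(size=n_hid)
v_obs = np.array([1.0, 0.0, 1.0])

# Every hidden configuration, one per row.
H_all = np.array(list(itertools.product([0.0, 1.0], repeat=n_hid)))

# Unnormalized P(h | v). The b^T v term does not depend on h and cancels,
# so it is left out.
unnorm_post = np.exp(H_all @ c_post + H_all @ (W_post.T @ v_obs))
posterior = unnorm_post / unnorm_post.sum()

# Brute force: P(h_j = 1 | v) is the total mass of configurations with h_j = 1.
p_on_brute = posterior @ H_all

# Closed form: sigma(c_j + v^T W[:, j]) for every j at once.
p_on_closed = sigmoid(c_post + W_post.T @ v_obs)

# Factorial form: product over j of sigma((2h - 1) * (c + W^T v))_j.
posterior_factorial = np.prod(
    sigmoid((2 * H_all - 1) * (c_post + W_post.T @ v_obs)), axis=1)

print(p_on_brute, p_on_closed)
```

The factor $(2\mathbf{h} - 1)$ maps each binary value to $\pm 1$, which selects $\sigma(x)$ for $h_j = 1$ and $\sigma(-x) = 1 - \sigma(x)$ for $h_j = 0$.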

4.3 Relation to MRF

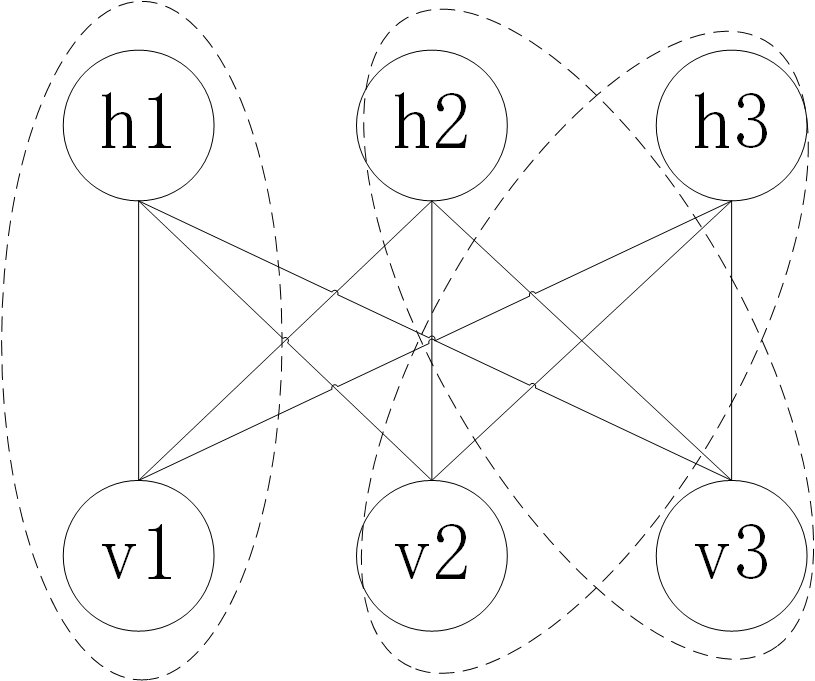

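An RBM is actually a log-linear Markov random field (MRF). I won't present the definition of an MRF here, only a picture that describes their relationship.

All pairwise clique functions have the same form:

$$\phi_{ij}(v_i, h_j) = \exp\left( v_i W_{ij} h_j \right)$$

where $W_{ij}$ is the weight between visible unit $v_i$ and hidden unit $h_j$. Bias terms, if present, enter as single-variable factors of the same exponential form.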
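One way to see the log-linear MRF structure is to check that $e^{-E(\mathbf{v}, \mathbf{h})}$ factorizes into one factor per edge $(v_i, h_j)$ plus one factor per unit. The sketch below assumes the energy $E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{c}^\top \mathbf{h} - \mathbf{v}^\top W \mathbf{h}$ and edge factors of the form $\exp(v_i W_{ij} h_j)$; the names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
W_mrf = rng.normal(size=(3, 2))
b_mrf = rng.normal(size=3)
c_mrf = rng.normal(size=2)
v_mrf = np.array([1.0, 0.0, 1.0])
h_mrf = np.array([0.0, 1.0])

energy_mrf = -(b_mrf @ v_mrf) - (c_mrf @ h_mrf) - v_mrf @ W_mrf @ h_mrf

# One factor per edge (v_i, h_j): exp(v_i * W_ij * h_j).
edge_factors = np.exp(W_mrf * np.outer(v_mrf, h_mrf))
# One factor per unit for the bias terms.
visible_factors = np.exp(b_mrf * v_mrf)
hidden_factors = np.exp(c_mrf * h_mrf)

product_of_factors = (edge_factors.prod()
                      * visible_factors.prod()
                      * hidden_factors.prod())

print(product_of_factors, np.exp(-energy_mrf))
```

The two printed numbers agree, which is the unnormalized probability of the configuration written once as a product of clique functions and once as $e^{-E}$.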

5. References

- Deep Learning, Goodfellow et al., MIT Press.

- Neural Networks for Machine Learning, Hinton et al., Coursera.

- Restricted Boltzmann machine, Wikipedia.

- Restricted Boltzmann Machines (RBM), DeepLearning 0.1 documentation.

- Deep Learning Short Course: 4-Connection, Yanyang Lee, GitHub.

6. Appendix

1. Because of an unidentified rendering problem in Jekyll, MathJax sometimes displays $P(\mathbf{h} \mid \mathbf{v})$ as P(h||v).